AI adoption in software development is on fire: 50% of devs now use it daily, a stunning leap from zero just two years ago. New tools wrapped around models like Claude, GPT, or Gemini keep getting better at generating accurate code quickly, and AI coding has been one of the hottest areas of technology investment so far this year. Naturally, the blockchain dev community has jumped in, with daily buzz on X and Discord about someone trying one of the tools to build a smart contract or a dApp for one of the blockchain ecosystems. At Breakpoint, many Solana developers told me they had experimented with AI tools, but most hadn’t stuck with them.

The question we asked ourselves was: how useful are existing AI tools for blockchain developers?

The Analysis

We conducted an analysis that adhered to the scientific method as much as possible: the same experiment was applied systematically to a range of tools and models, with the aim of producing results that are unbiased and easy to verify. We limited the scope of the study to Solana. The purpose was to evaluate the capabilities of several AI models and coding tools in generating secure and functional Solana programs, focusing on Vanilla/Native and the Anchor framework. Solana is known for its high-performance, low-latency architecture, so the limiting factor for a dApp’s performance becomes the program itself, in particular how efficiently it uses the network.

We picked ChatGPT-4o, Claude 3.5 (Claude Sonnet), NinjaTech Coder, and Gemini 1.5 Flash, used either directly or through tools that leverage one of those models (e.g., Cursor, Replit). By systematically testing these tools, the study aimed to determine their practical utility, particularly for those who may rely on AI assistance to streamline smart contract development.

Methodology

We tested two use cases that we described through two different prompts. Since the way the prompt is written can be a limiting factor in the quality of the result, we sought to eliminate that impact as much as possible by writing prompts that specified the dApp in much more detail than a typical user would, for instance by explicitly calling out methods, data types, and so on.

An example of such a detailed prompt is below:

“Create a Solana program that implements an escrow system for exchanging tokens between two parties. It allows a party to create an offer to exchange a specific amount of one token for another token. The other party can then accept the offer, and the program will transfer the tokens accordingly. The program’s workflow is as follows:

- Create Offer:
  - Party one initiates an offer by providing the following information:
    - The amount of tokens they are willing to send (offer_amount).
    - The mint address of the token they are sending (offer_token).
    - The amount of tokens they expect to receive in return (ask_amount).
    - The mint address of the token they expect to receive (ask_token).
    - The public key of the other party (party_two).
  - The program creates an offer details account, which stores the offer information.
  - The program transfers the offer_amount from party one’s send_account to a temporary account (temp_account) controlled by a PDA (Program Derived Address).
- Accept Offer:
  - Party two accepts the offer by providing their public key and the necessary token accounts.
  - The program verifies that the offer details account exists and that the provided token accounts are valid.
  - The program transfers the ask_amount from party two’s send_account to party one’s receive_account.
  - The program transfers the offer_amount from the temp_account to party two’s receive_account.
- Close Offer:
  - Party one can close the offer if it is not accepted.
  - The program transfers the offer_amount from the temp_account back to party one’s receive_account.

The program uses a PDA to act as the authority for the temp_account, ensuring that only the program can transfer tokens from it. This prevents either party from stealing the tokens before the offer is accepted or closed.”
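
To make the state described in this prompt concrete, the offer details account and its three instructions can be modeled roughly as below. This is a simplified sketch in plain Rust, not Solana or Anchor API code and not output from any of the tested tools: `Pubkey` is a stand-in byte array, `OfferStatus` and `EscrowError` are hypothetical names, and the token transfers are left out entirely.

```rust
// Simplified, std-only model of the offer lifecycle from the prompt.
// `Pubkey` is a hypothetical stand-in, not the solana_program type.
type Pubkey = [u8; 32];

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum OfferStatus {
    Open,
    Accepted,
    Closed,
}

#[derive(Debug, PartialEq, Eq)]
enum EscrowError {
    NotOpen,
    WrongParty,
}

// Mirrors the fields the prompt asks the offer details account to store.
#[allow(dead_code)]
#[derive(Debug)]
struct OfferDetails {
    party_one: Pubkey,
    party_two: Pubkey,
    offer_token: Pubkey,
    offer_amount: u64,
    ask_token: Pubkey,
    ask_amount: u64,
    status: OfferStatus,
}

impl OfferDetails {
    // "Create Offer": record the terms and mark the offer as open.
    fn create(
        party_one: Pubkey,
        party_two: Pubkey,
        offer_token: Pubkey,
        offer_amount: u64,
        ask_token: Pubkey,
        ask_amount: u64,
    ) -> Self {
        OfferDetails {
            party_one,
            party_two,
            offer_token,
            offer_amount,
            ask_token,
            ask_amount,
            status: OfferStatus::Open,
        }
    }

    // "Accept Offer": only the named counterparty may accept an open offer.
    fn accept(&mut self, caller: &Pubkey) -> Result<(), EscrowError> {
        if self.status != OfferStatus::Open {
            return Err(EscrowError::NotOpen);
        }
        if caller != &self.party_two {
            return Err(EscrowError::WrongParty);
        }
        self.status = OfferStatus::Accepted;
        Ok(())
    }

    // "Close Offer": only party one may close, and only while the offer is open.
    fn close(&mut self, caller: &Pubkey) -> Result<(), EscrowError> {
        if self.status != OfferStatus::Open {
            return Err(EscrowError::NotOpen);
        }
        if caller != &self.party_one {
            return Err(EscrowError::WrongParty);
        }
        self.status = OfferStatus::Closed;
        Ok(())
    }
}
```

In a real program each of these transitions would also move tokens through the PDA-controlled temp_account; the sketch only shows which party may trigger which transition.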

In order to evaluate the inference results of the models and tools, we determined a set of predefined criteria, focusing on the aspects of Solana development we thought were the most important when evaluating the quality of blockchain code. Each tool was tasked with generating both Solana Vanilla/Native and Anchor-based programs, which were then evaluated against our metrics. These included project structure, code compilation success, functionality, correctness of program instructions, parameter handling, account management, security checks, use of serialization/deserialization methods, optimization for compute units, Cross-Program Invocation (CPI) calls, and dependency management. The assessment also covered whether the AI-generated code adhered to best practices, used the latest dependencies, and handled security-critical operations like signer verification. By comparing the performance of each tool, this study provides a detailed analysis of the current state of AI-driven coding support for Solana blockchain projects.

Top 6 Takeaways from the AI Tools’ Benchmarking Study on Solana Code

1. Inability to Produce Compilable Code Consistently: Across all evaluated tools, none were able to generate Solana Vanilla/Native or Anchor-based programs that compiled without errors on the first attempt. In most cases, significant manual intervention was required to correct various issues, such as syntax errors, outdated dependencies, or improper account definitions. This indicates that generic AI models are not yet reliable for producing functional Solana code directly out of the box.

Example: The following failed compilation was produced by an AI model that couldn’t generate a full Solana program project; in the case below, the user needs to copy and paste each AI-generated file into a project. This process can be error-prone, especially when the user doesn’t have the required experience.

- (Code generated by AI doesn’t compile due to errors related to “use of undeclared type EscrowError”, “use of undeclared crate or module”, and struct field types that are not in scope.)

- In another case the AI didn’t import (use) the correct dependencies, causing the compilation process to fail.

- In the accept_offer and close_offer functions, the code references temp_account to derive the Program Derived Address (PDA) and obtain the bump seed. However, temp_account is not included in the account context structs for these functions.

2. Inadequate Security and Compliance Checks: A common shortfall observed across the tools was the lack of proper security measures in the generated code. Many instances were identified where the AI-generated code failed to include critical checks, such as ensuring accounts were correctly marked as signers, validating ownership, or confirming address lengths. Additionally, key practices like handling unchecked arithmetic operations were often overlooked, raising concerns about the security of the generated programs.

- The generated code defined party_two as AccountInfo without performing any check on it and without adding the /// CHECK comment, which prevents the program from compiling. https://www.anchor-lang.com/docs/the-accounts-struct#safety-checks

- The instruction implementation doesn’t verify that the party_one_token_account owner field equals the party_one pubkey. https://docs.rs/spl-token/7.0.0/spl_token/state/struct.Account.html#structfield.owner

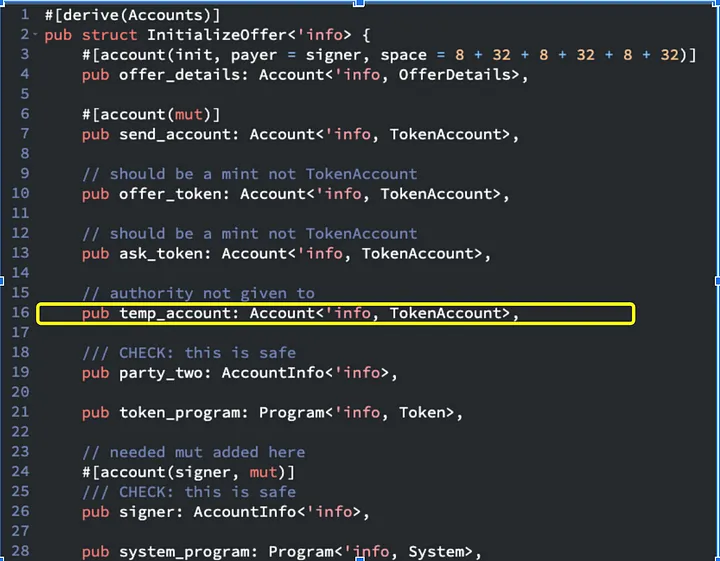

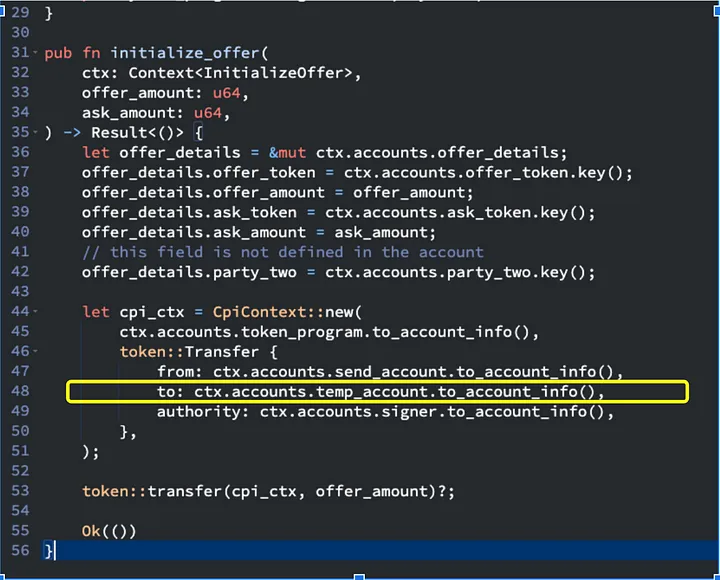
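
Two of the checks named in this takeaway, owner validation and overflow-safe arithmetic, can be sketched in plain Rust. This is a minimal illustration under stated assumptions, not the spl-token implementation: `TokenAccount` here is a hypothetical struct that keeps only an `owner` and an `amount` field, `Pubkey` is a stand-in byte array, and `CheckError` is an invented error type.

```rust
// Std-only sketch; `Pubkey`, `TokenAccount`, and `CheckError` are
// hypothetical stand-ins, not types from solana_program or spl_token.
type Pubkey = [u8; 32];

#[derive(Debug, PartialEq, Eq)]
enum CheckError {
    OwnerMismatch,
    InsufficientFunds,
    Overflow,
}

// Keeps only the two fields this example needs.
struct TokenAccount {
    owner: Pubkey,
    amount: u64,
}

// Reject the account unless its owner field is the expected party.
fn verify_owner(account: &TokenAccount, expected: &Pubkey) -> Result<(), CheckError> {
    if &account.owner != expected {
        return Err(CheckError::OwnerMismatch);
    }
    Ok(())
}

// Move `amount` between accounts using checked arithmetic, so an
// underflow or overflow becomes an error instead of a wrapped value.
fn transfer(
    from: &mut TokenAccount,
    to: &mut TokenAccount,
    authority: &Pubkey,
    amount: u64,
) -> Result<(), CheckError> {
    verify_owner(from, authority)?;
    let new_from = from
        .amount
        .checked_sub(amount)
        .ok_or(CheckError::InsufficientFunds)?;
    let new_to = to.amount.checked_add(amount).ok_or(CheckError::Overflow)?;
    // Balances are only written after every check has passed.
    from.amount = new_from;
    to.amount = new_to;
    Ok(())
}
```

Computing both new balances before writing either one keeps the accounts unchanged when any check fails.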

3. Incorrect Handling of Accounts and Instructions: The tools frequently made errors in how Solana program instructions were structured, particularly in handling accounts and Pubkeys. For instance, Pubkeys were often incorrectly received as AccountInfo objects, and accounts that required mutability were not correctly marked. This not only led to issues in execution but also reflected a fundamental misunderstanding of how Solana’s account system functions, further complicating code correctness and optimization.

- In this case, the code transfers tokens to temp_account, but temp_account is not marked as mutable.
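
The mutability problem can be illustrated with a small model of an account that carries a writable flag. This is a sketch under assumptions, not Solana runtime code: `AccountView` is a hypothetical struct loosely inspired by the `is_writable` flag on Solana's `AccountInfo`, and `credit` and `AccountError` are invented for the example.

```rust
// Hypothetical, simplified view of an account as a program receives it.
// The real AccountInfo carries more fields; only the flag matters here.
struct AccountView {
    is_writable: bool,
    balance: u64,
}

#[derive(Debug, PartialEq, Eq)]
enum AccountError {
    NotWritable,
    Overflow,
}

// Credit `amount` to `dest`, refusing to modify an account
// that was not passed in as writable.
fn credit(dest: &mut AccountView, amount: u64) -> Result<(), AccountError> {
    if !dest.is_writable {
        return Err(AccountError::NotWritable);
    }
    dest.balance = dest
        .balance
        .checked_add(amount)
        .ok_or(AccountError::Overflow)?;
    Ok(())
}
```

In Anchor, the equivalent declaration is the `mut` constraint on the account in the context struct; the sketch only shows why a destination account has to be flagged as writable before a transfer into it can succeed.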

4. Outdated or Improper Dependency Usage: The generated code often relied on outdated versions of dependencies or included unnecessary ones, demonstrating a lack of awareness of the latest Solana ecosystem standards. Some tools even used deprecated functions, which could lead to potential vulnerabilities or compatibility issues in production environments. This highlights the tools’ insufficient alignment with current best practices in Solana development.

5. Confusion Between Rust and TypeScript Environments: In several cases, the models confused Rust code with TypeScript, leading to code that was entirely off-target for Solana development. This confusion suggests that these tools lack the nuanced understanding needed to differentiate between programming contexts, which is critical when working in blockchain environments where specificity is crucial. It shows that while AI can generate code, it still struggles to understand the distinct requirements of different programming frameworks.

6. Lack of Secure Handling for Critical Solana Program Elements: One of the most concerning takeaways was the consistent failure to implement essential security measures, such as signer verification and the correct use of Program Derived Addresses (PDAs). Many of the generated programs either mishandled or entirely omitted these checks, leaving them vulnerable to exploits such as unauthorized access or fund leakage. The AI-generated code consistently defaulted to insecure patterns, which would expose the programs to potential attacks if deployed on a live network. This underscores the need for thorough manual review and security auditing when using AI-generated code for Solana smart contracts with these generic tools.
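
Signer verification, the check most often missing here, comes down to two conditions: the account must have signed the transaction, and its key must be the one the program expects. The sketch below models that in plain Rust under stated assumptions: `AccountView` is a hypothetical struct loosely inspired by the `key` and `is_signer` fields of Solana's `AccountInfo`, and `require_authority` and `AuthError` are invented names.

```rust
// Std-only sketch; `Pubkey` and `AccountView` are hypothetical stand-ins.
type Pubkey = [u8; 32];

struct AccountView {
    key: Pubkey,
    is_signer: bool,
}

#[derive(Debug, PartialEq, Eq)]
enum AuthError {
    MissingSignature,
    WrongAuthority,
}

// Accept the caller only if it signed the transaction AND
// its key matches the authority stored for the offer.
fn require_authority(caller: &AccountView, expected: &Pubkey) -> Result<(), AuthError> {
    if !caller.is_signer {
        return Err(AuthError::MissingSignature);
    }
    if &caller.key != expected {
        return Err(AuthError::WrongAuthority);
    }
    Ok(())
}
```

Checking only the key is not enough: anyone can pass party one's public key as an account, so without the signature check an instruction such as closing the offer could be triggered by a third party.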

Conclusion

AI-generated code delivers an instant “wow” factor the first time a developer uses it, mostly due to its speed. But the devil is in the details: compiling the code, integrating it with a client, or auditing it often reveals both obvious and subtle bugs. Some are easy to catch, others take hours or days, and many could have dire consequences for a blockchain developer if they slipped through to production, because code is money in Web3. While tools like ChatGPT, Claude, and Gemini are advancing code generation in Web2, our benchmarking study shows they fall far short of reliably producing Solana smart contracts. These models may understand Rust and Solana syntax, but they lack the deep, specialized knowledge needed for secure and efficient blockchain coding. Developers should proceed with caution, because these generic models and tools simply aren’t ready for blockchain. Without AI tailored to Web3’s unique demands, the blockchain community risks falling behind in the AI revolution.

I’ll keep posting on the topic of blockchain development, Solana, and AI. I recommend following @CodigoPlatform on X for additional content as well.

Author: JP Marcos, CEO and Founder